Background

I am currently working on a large WinForms codebase.

It has 60+ Visual Studio projects in a single solution.

The project dependencies are “V” shaped: many projects depend on a few projects, which in turn depend on even fewer projects. So once the few base projects have been built in sequence, the many projects on top of them should be able to build in parallel.
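
To make the “V” shape concrete, here is a minimal Python sketch (not part of the original setup) that groups projects into build “waves” from a dependency map. The project names are hypothetical. Every project in a wave depends only on projects in earlier waves, so the width of the later waves gives a rough feel for how much MSBuild's /m switch could build in parallel. MSBuild's scheduler does not literally build in waves; this is only an illustration.

```python
def build_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group projects into waves: each project depends only on earlier waves."""
    # Include projects that only appear as dependencies (e.g. base libraries).
    projects = set(deps) | {d for ds in deps.values() for d in ds}
    # Copy the dependency sets so the caller's map is not mutated.
    remaining = {p: set(deps.get(p, ())) for p in projects}
    waves = []
    while remaining:
        ready = sorted(p for p, ds in remaining.items() if not ds)
        if not ready:
            raise ValueError("dependency cycle among: " + ", ".join(sorted(remaining)))
        waves.append(ready)
        for p in ready:
            del remaining[p]
        # Projects in this wave are now "built"; drop them as dependencies.
        for ds in remaining.values():
            ds.difference_update(ready)
    return waves


# Hypothetical V-shaped solution: one base, two mid-level, four top-level projects.
deps = {
    "Core": set(),
    "Data": {"Core"},
    "Controls": {"Core"},
    "Orders": {"Data", "Controls"},
    "Customers": {"Data", "Controls"},
    "Invoices": {"Data", "Controls"},
    "Reports": {"Data", "Controls"},
}
for number, wave in enumerate(build_waves(deps), start=1):
    print(f"wave {number}: {', '.join(wave)}")
# wave 1: Core
# wave 2: Controls, Data
# wave 3: Customers, Invoices, Orders, Reports
```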

Getting started

First of all, I needed a baseline measurement of how long the build takes.

I don't trust Rebuild not to cheat, so I deleted all the source files on disk and fetched them again from source control.

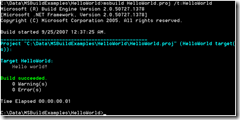

Next, Visual Studio does not show build times, so I used MSBuild from the console. This is very easy, because MSBuild can build a .sln file directly (it translates the solution into an MSBuild project internally), e.g. “msbuild my-solution.sln”, and the total build time is shown when it finishes.
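
MSBuild already prints the elapsed time, but when comparing many runs (different disks, different settings) a small wrapper that records the wall-clock time can help. This is a minimal Python sketch; `time_command` is a hypothetical helper, and using it on a real build assumes msbuild is on the PATH (for example from a Visual Studio command prompt) and that the working copy was cleaned first, as described above.

```python
import subprocess
import time


def time_command(args: list[str]) -> tuple[float, int]:
    """Run a command, inheriting the console, and return (elapsed seconds, exit code)."""
    start = time.perf_counter()
    completed = subprocess.run(args)
    return time.perf_counter() - start, completed.returncode


# Example (assumes msbuild is on PATH):
#   elapsed, code = time_command(["msbuild", "my-solution.sln"])
#   print(f"exit code {code}, {elapsed:.1f} s")
```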

Analysis

My build was taking approximately 4 minutes, so I needed to find the low-hanging fruit: changes that would make a big impact with a minimum of work.

Adding a parameter to msbuild helped me do that: with “msbuild my-solution.sln /v:diag” (diagnostic verbosity) the build took longer, but I could see what it was doing. One thing immediately caught my attention: the generation of a COM wrapper for MSHTML.dll. It took almost a minute, which is 25% of the total time. So I changed the reference from the COM library to the PIA (Primary Interop Assembly).
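
Scrolling through a diagnostic log by hand works, but the log also ends with a performance summary that can be sorted. Here is a minimal Python sketch, assuming the /v:diag output was redirected to a file (e.g. `msbuild my-solution.sln /v:diag > build.log`) and that the “Task Performance Summary:” section contains lines shaped like `52000 ms  ResolveComReference  1 calls`. That layout is an assumption; check it against your own log and adjust the regex if your MSBuild version prints it differently. In such a summary, COM wrapper generation would typically show up under the ResolveComReference task.

```python
import re

# Assumed summary line layout: "<ms> ms  <TaskName>  <count> calls".
SUMMARY_LINE = re.compile(r"^\s*(\d+)\s+ms\s+(\S+)\s+(\d+)\s+calls\s*$")


def slowest_tasks(log_text: str, top: int = 5) -> list[tuple[str, int]]:
    """Return the `top` tasks with the largest total ms from the task summary."""
    in_section = False
    totals: dict[str, int] = {}
    for line in log_text.splitlines():
        if line.strip() == "Task Performance Summary:":
            in_section = True
            continue
        if not in_section:
            continue
        match = SUMMARY_LINE.match(line)
        if match:
            name = match.group(2)
            totals[name] = totals.get(name, 0) + int(match.group(1))
        elif line.strip() and totals:
            break  # first non-summary line after the table ends the section
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:top]
```

Usage would be something like `slowest_tasks(open("build.log", encoding="utf-8", errors="replace").read())`; the file name is hypothetical.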

During my analysis I repeatedly had to delete the local files and get them from source control, and I noticed that my source code files and 3rd party DLLs were approximately 200 MB. After the build was done, the folder was almost 3 GB!
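
To repeat that before/after comparison without eyeballing Explorer, a folder can be summed up with a few lines of Python. This is a minimal sketch; `folder_size_mb` is a hypothetical helper, and it skips symbolic links so linked files are not counted twice.

```python
import os


def folder_size_mb(root: str) -> float:
    """Total size of all regular files under root, in MB (1 MB = 1024 * 1024 bytes)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total / (1024 * 1024)
```

Running it on the working copy right after fetching from source control and again after a build shows how much the build itself writes.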

The culprit was a little property called “Copy Local”, which Visual Studio automatically sets to true for the references in every project (except for the system references).

So I set Copy Local to false on all project references, and the disk writes went down. Way down.
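
In the project file, “Copy Local” is stored as `<Private>` metadata on each reference. Changing it by hand in 60+ projects is tedious, so here is a minimal Python sketch that sets `<Private>False</Private>` on every project reference and on every file reference that has a `<HintPath>` (typically third-party DLLs), leaving system references alone. It assumes the old-style project format with the `http://schemas.microsoft.com/developer/msbuild/2003` namespace; `set_copy_local_false` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Namespace used by old-style (pre-SDK) .csproj files.
MSBUILD_NS = "http://schemas.microsoft.com/developer/msbuild/2003"
ET.register_namespace("", MSBUILD_NS)  # write it back as the default namespace, not "ns0:"


def _q(tag: str) -> str:
    """Qualify a tag name with the MSBuild namespace."""
    return f"{{{MSBUILD_NS}}}{tag}"


def set_copy_local_false(csproj_xml: str) -> str:
    """Set Copy Local (<Private>) to False on project and HintPath references."""
    root = ET.fromstring(csproj_xml)
    # Collect first, then modify, so we don't add children while iterating.
    targets = [
        item for item in root.iter()
        if item.tag == _q("ProjectReference")
        or (item.tag == _q("Reference") and item.find(_q("HintPath")) is not None)
    ]
    for item in targets:
        private = item.find(_q("Private"))
        if private is None:
            private = ET.SubElement(item, _q("Private"))
        private.text = "False"
    return ET.tostring(root, encoding="unicode")
```

ElementTree drops XML comments and the XML declaration and may reformat whitespace, so review the diff of each project file before checking it in.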

But I had created a new problem: I could no longer start the program, because the referenced DLLs were no longer copied next to the main exe (the Windows services and websites did not start either). So, in order to enable “F5” again, I set the output path of all the projects to the same bin folder.
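
The shared output folder is the `<OutputPath>` property, which usually appears once per build configuration (Debug, Release). A minimal Python sketch, again assuming old-style project files in the 2003 MSBuild namespace; `set_output_path` is a hypothetical helper, and the caller must supply a path that is correct relative to each project, e.g. `..\bin\` for a project one folder below the solution.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = "http://schemas.microsoft.com/developer/msbuild/2003"
ET.register_namespace("", MSBUILD_NS)  # keep the default namespace on output


def set_output_path(csproj_xml: str, output_path: str) -> str:
    """Point every existing <OutputPath> (typically one per configuration) at output_path."""
    root = ET.fromstring(csproj_xml)
    for elem in root.iter(f"{{{MSBUILD_NS}}}OutputPath"):
        elem.text = output_path
    return ET.tostring(root, encoding="unicode")
```

Changing only existing `<OutputPath>` elements keeps the per-configuration structure intact instead of adding a new unconditional property.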

Hardware

My main laptop shipped with a 64 GB Samsung SSD, so I needed an additional hard drive for my VMs and growing codebase. I ordered an Icy Box enclosure with USB power and eSATA, and a WD VelociRaptor 10,000 RPM hard drive (the fastest non-SSD out there?). But the power-hungry VelociRaptor needed more than USB power could supply, so I ended up also ordering a WD Black 7200 RPM HDD.

So basically I had an SSD, a fast 7200 RPM drive and a 10,000 RPM drive, so why not let them duke it out :-)

Actually, I added another contender: a RAM drive, like the one described here http://stevesmithblog.com/blog/using-ramdisk-to-speed-build-times/

Build times in seconds; lower is better.

The first thing to notice is that the SSD is the slowest of all the disks! Now, I am no expert, but the SSD runs the OS and all the applications, so it has other duties too. It is also a fairly early SSD; I think the newer Intel X25 performs better for builds (lots of small reads and writes). It is still a really good disk for my OS and applications; everything runs really smoothly.

The next thing to notice is that the RAM drive's advantage flattens out as there is less disk activity. It looks like the RAM itself becomes a bottleneck (maybe the compiler is greedy on the RAM bus?).

The last thing to notice is that the 7200 RPM and 10,000 RPM drives are almost equally fast when building to the same bin folder with Copy Local = false. And the 7200 RPM drive is a lot cheaper.

Conclusion

Remember, these exact results are NOT universally applicable. But the approach is: look for things that take a long time to build, like the COM reference, and reduce disk reads/writes. Going from 4 minutes to under 1 minute is not too bad.

2 comments:

First of all - nice analysis.

The disk speed thing pretty much repeats what we found out at my previous project when testing SSDs - .NET compilation is CPU bound, not I/O bound. So a bigger CPU = win. Actually, since the compilation is single-threaded by default, dual-core PCs often beat quad-cores for pure compilation (obviously the quad has many other advantages).

Did you experiment with the /m msbuild option for running things in parallel? You hinted at it at the start of the post but never got back to it. We parallelized our test projects and found /m:4 worked best for our dual-core workstations. YMMV :-)

Also, take a look at Thomas Ardal's MSBuild profiler. It's pretty simple, but it's very useful. It's over at CodePlex: http://msbuildprofiler.codeplex.com/

- Rasmus.

All builds were run with /m:2, which is the number of cores my laptop has. I tried /m:4 and got some exciting results, but I could not verify them the next day. Perhaps I did something wrong the first time.

Thanks for the tip about the msbuild profiler.
